Install MySQL


wget https://dev.mysql.com/get/mysql57-community-release-el7-11.noarch.rpm

yum localinstall mysql57-community-release-el7-11.noarch.rpm

rpm --import https://repo.mysql.com/RPM-GPG-KEY-mysql-2022

vim /etc/yum.repos.d/mysql-community.repo

In the MySQL 5.7 section, change baseurl to https://mirrors.cloud.tencent.com/mysql/yum/mysql-5.7-community-el7-x86_64/
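The same baseurl change can be scripted instead of made by hand in vim. The following is a minimal sketch, not the author's method: `set_repo_baseurl` is a hypothetical helper, and the section name `mysql57-community` in the usage comment is an assumption, so check the section headers in your own repo file first.

```shell
# Hypothetical helper: rewrite the baseurl of one section in a yum .repo file.
# Usage: set_repo_baseurl <repo-file> <section-name> <new-url>
set_repo_baseurl() {
  local repo_file=$1 section=$2 new_url=$3 tmp_file status
  tmp_file=$(mktemp) || return 1
  awk -v section="[$section]" -v url="$new_url" '
    /^\[/ { in_section = ($0 == section) }   # track which section we are in
    in_section && /^baseurl=/ { print "baseurl=" url; next }
    { print }
  ' "$repo_file" > "$tmp_file" && cat "$tmp_file" > "$repo_file"
  status=$?
  rm -f "$tmp_file"
  return "$status"
}

# Example (the section name is an assumption):
# set_repo_baseurl /etc/yum.repos.d/mysql-community.repo mysql57-community \
#   https://mirrors.cloud.tencent.com/mysql/yum/mysql-5.7-community-el7-x86_64/
```

Only the named section is touched, so the baseurl lines of the other sections in the file stay as they were.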

yum install -y mysql-community-server


Install Hive


tar -zxvf apache-hive-3.1.2-bin.tar.gz -C /opt/module/

vim /etc/profile.d/my_env.sh

export HIVE_HOME=/opt/module/apache-hive-3.1.2-bin

export PATH=$PATH:$HIVE_HOME/bin

source /etc/profile
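If the setup is repeated, editing my_env.sh by hand can leave duplicate export lines. The sketch below shows one way to add the two lines idempotently; `append_once` is a hypothetical helper introduced here for illustration.

```shell
# Hypothetical helper: append a line to a file only if that exact line is absent.
append_once() {
  local file=$1 line=$2
  # -x matches whole lines, -F treats the line as a fixed string (no regex)
  grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example for the variables above (single quotes keep $PATH and $HIVE_HOME unexpanded):
# append_once /etc/profile.d/my_env.sh 'export HIVE_HOME=/opt/module/apache-hive-3.1.2-bin'
# append_once /etc/profile.d/my_env.sh 'export PATH=$PATH:$HIVE_HOME/bin'
```

Running the helper twice with the same line leaves the file unchanged the second time.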


Configure hive-site.xml

Path: /opt/module/apache-hive-3.1.2-bin/conf/

Create the file: vim hive-site.xml

<?xml version="1.0" encoding="utf-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<configuration>

<property>

<name>javax.jdo.option.ConnectionURL</name>

<value>jdbc:mysql://hadoop100:3306/metastore?useSSL=false</value>

</property>

<property>

<name>javax.jdo.option.ConnectionDriverName</name>

<value>com.mysql.jdbc.Driver</value>

</property>

<property>

<name>javax.jdo.option.ConnectionUserName</name>

<value>root</value>

</property>

<property>

<name>javax.jdo.option.ConnectionPassword</name>

<value>root</value>

</property>

<property>

<name>hive.metastore.schema.verification</name>

<value>false</value>

</property>

<property>

<name>hive.metastore.event.db.notification.api.auth</name>

<value>false</value>

</property>

<property>

<name>hive.metastore.warehouse.dir</name>

<value>/user/hive/warehouse</value>

</property>

<property>

<name>hive.server2.enable.doAs</name>

<value>false</value>

</property>

<property>

<name>hive.exec.mode.local.auto</name>

<value>true</value>

</property>

<property>

<name>mapred.map.child.java.opts</name>

<value>-Xmx2048m</value>

</property>

</configuration>
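A typo in a property name or value is easy to miss in a file like this. As a quick sanity check, the sketch below prints the value of a named property; `get_property` is a hypothetical helper, and it assumes each `<name>` and `<value>` element sits on its own line, as in the file above.

```shell
# Hypothetical helper: print the <value> of a named <property> in a Hadoop-style XML file.
# Assumes <name> and <value> each sit on their own line.
get_property() {
  local file=$1 name=$2
  awk -v want="$name" '
    /<name>/ {
      line = $0
      gsub(/.*<name>|<\/name>.*/, "", line)
      found = (line == want)
    }
    found && /<value>/ {
      line = $0
      gsub(/.*<value>|<\/value>.*/, "", line)
      print line
      exit
    }
  ' "$file"
}

# Example:
# get_property /opt/module/apache-hive-3.1.2-bin/conf/hive-site.xml javax.jdo.option.ConnectionURL
```

For the file above, the example should print the JDBC URL; an empty result means the property name was not found.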


Initialize the Hive metastore database

schematool -initSchema -dbType mysql -verbose
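schematool reaches MySQL through JDBC, so a MySQL driver jar has to be on Hive's classpath before this command can succeed. The notes do not show that step, so the sketch below is only a pre-flight check under an assumption: that the jar sits in the Hive lib directory and follows the usual `mysql-connector-*.jar` naming. `has_mysql_driver` is a hypothetical helper.

```shell
# Hypothetical pre-flight check: is a MySQL JDBC driver jar present in a Hive lib directory?
# Assumes the jar name matches mysql-connector-*.jar.
has_mysql_driver() {
  local lib_dir=$1 jar
  for jar in "$lib_dir"/mysql-connector-*.jar; do
    # If the glob matched nothing, the loop sees the literal pattern, which is not a file.
    [ -f "$jar" ] && return 0
  done
  return 1
}

# Example:
# has_mysql_driver "$HIVE_HOME/lib" || echo "MySQL JDBC driver jar not found in $HIVE_HOME/lib"
```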

Tune MapReduce

vim $HADOOP_HOME/etc/hadoop/mapred-site.xml

Add the following configuration:

<property>

<name>mapreduce.map.memory.mb</name>

<value>1536</value>

</property>

<property>

<name>mapreduce.map.java.opts</name>

<value>-Xmx1024M</value>

</property>

<property>

<name>mapreduce.reduce.memory.mb</name>

<value>3072</value>

</property>

<property>

<name>mapreduce.reduce.java.opts</name>

<value>-Xmx2560M</value>

</property>
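In each pair above, the JVM heap (-Xmx) is smaller than the container size (memory.mb), which leaves 512 MB of the container for non-heap memory. The arithmetic can be sketched as follows; `heap_percent` is a hypothetical helper that uses integer division, so results are rounded down.

```shell
# Print JVM heap (-Xmx, in MB) as an integer percentage of the container size (in MB).
heap_percent() {
  local container_mb=$1 heap_mb=$2
  echo $(( heap_mb * 100 / container_mb ))
}

heap_percent 1536 1024   # map:    prints 66
heap_percent 3072 2560   # reduce: prints 83
```

If you change one value of a pair, it is worth recomputing the ratio so that the heap never meets or exceeds the container size.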


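Configure Beeline

Edit core-site.xml so that Hadoop can be accessed from any node (the settings let the root user act as a proxy user from any host and for any group):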

vim $HADOOP_HOME/etc/hadoop/core-site.xml

<property>

<name>hadoop.proxyuser.root.hosts</name>

<value>*</value>

</property>

<property>

<name>hadoop.proxyuser.root.groups</name>

<value>*</value>

</property>
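The two property names follow the pattern `hadoop.proxyuser.<user>.hosts` and `hadoop.proxyuser.<user>.groups`, where `<user>` is the account the service runs as (root in these notes). If your services run as a different account, the names change accordingly. The sketch below prints the pair for a given user; `proxyuser_properties` is a hypothetical helper for illustration.

```shell
# Hypothetical helper: print the two proxy-user properties for a given service user.
proxyuser_properties() {
  local user=$1 kind
  for kind in hosts groups; do
    printf '<property>\n  <name>hadoop.proxyuser.%s.%s</name>\n  <value>*</value>\n</property>\n' "$user" "$kind"
  done
}

# Prints the same two properties as above, for the root user.
proxyuser_properties root
```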


myhadoop.sh

Path: /opt/module/hadoop-3.1.3/sbin/myhadoop.sh

#!/bin/bash

if [ $# -lt 1 ]

then

echo "No Args Input..."

exit 1

fi

case $1 in

"start")

echo " =================== Starting the hadoop cluster ==================="

echo " --------------- Starting hdfs ---------------"

ssh hadoop100 "/opt/module/hadoop-3.1.3/sbin/start-dfs.sh"

echo " --------------- Starting yarn ---------------"

ssh hadoop101 "/opt/module/hadoop-3.1.3/sbin/start-yarn.sh"

echo " --------------- Starting historyserver ---------------"

ssh hadoop100 "/opt/module/hadoop-3.1.3/bin/mapred --daemon start historyserver"

;;

"stop")

echo " =================== Stopping the hadoop cluster ==================="

echo " --------------- Stopping historyserver ---------------"

ssh hadoop100 "/opt/module/hadoop-3.1.3/bin/mapred --daemon stop historyserver"

echo " --------------- Stopping yarn ---------------"

ssh hadoop101 "/opt/module/hadoop-3.1.3/sbin/stop-yarn.sh"

echo " --------------- Stopping hdfs ---------------"

ssh hadoop100 "/opt/module/hadoop-3.1.3/sbin/stop-dfs.sh"

;;

"status")

echo " =================== hadoop cluster status ==================="

for host in hadoop100 hadoop101 hadoop102

do

echo =============== $host ===============

ssh $host jps

done

;;

"hive")

"$0" start

"$0" status

nohup hiveserver2 &

tail -f nohup.out

;;

*)

echo "Input Args Error..."

;;

esac


Start the services

myhadoop.sh hive

(Run myhadoop.sh stop before shutting down the machine.)

Beeline login command (run it in a separate terminal window):

beeline -u jdbc:hive2://hadoop100:10000/db_hive -n root -p root

To exit: !quit
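HiveServer2 can take some time after startup before it accepts connections on port 10000, so a beeline login issued too early may be refused. The sketch below polls the port before connecting. `wait_for_port` is a hypothetical helper, the retry count is an arbitrary choice, and the approach relies on bash's `/dev/tcp` pseudo-device, so it needs bash rather than plain sh.

```shell
# Hypothetical helper: poll a TCP port until it accepts connections or retries run out.
# Relies on bash's /dev/tcp pseudo-device.
wait_for_port() {
  local host=$1 port=$2 retries=${3:-60} i=0
  while [ "$i" -lt "$retries" ]; do
    # The subshell opens the connection and closes it again when it exits.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  return 1
}

# Example (waits up to roughly 120 seconds):
# wait_for_port hadoop100 10000 120 && beeline -u jdbc:hive2://hadoop100:10000/db_hive -n root -p root
```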

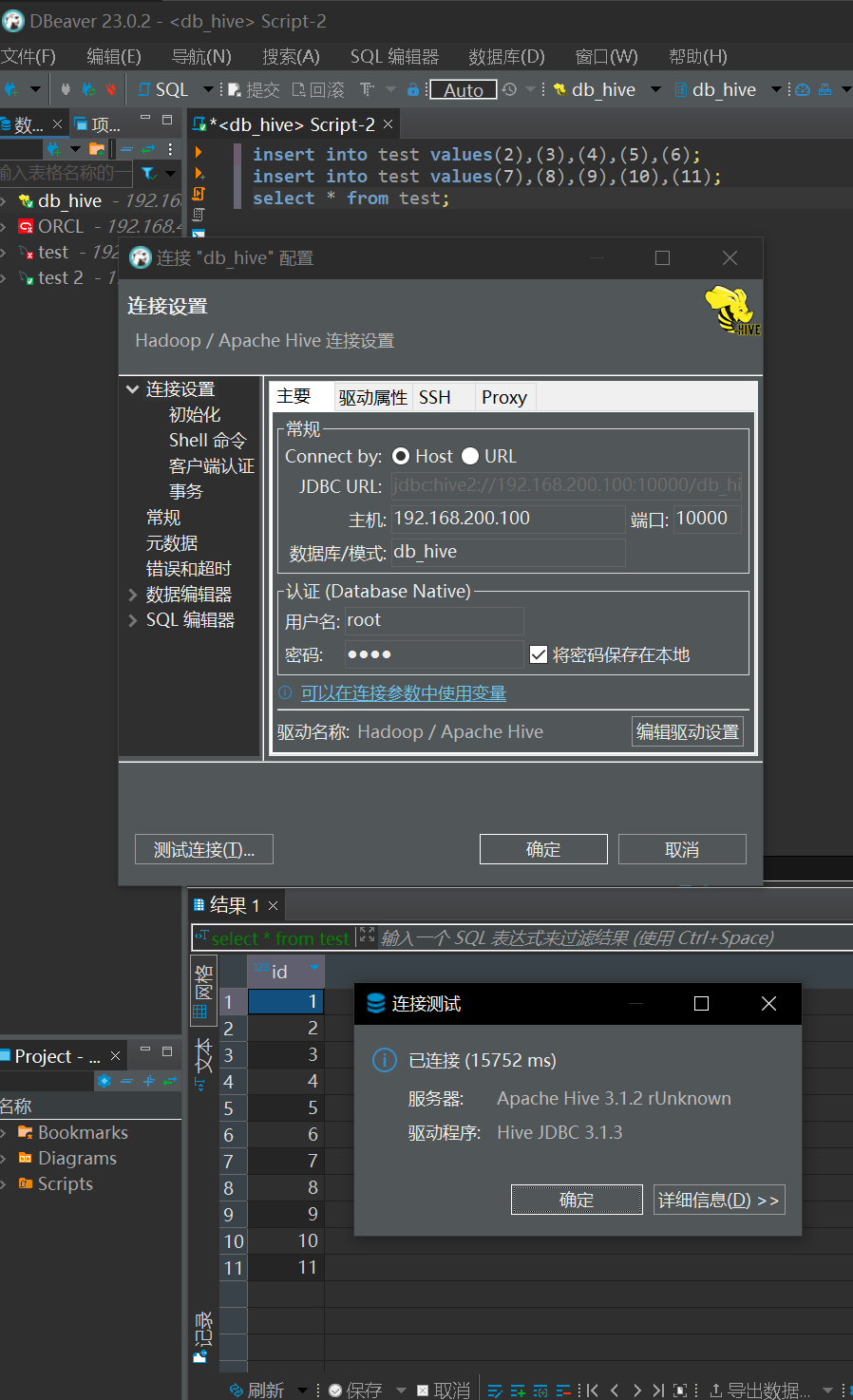

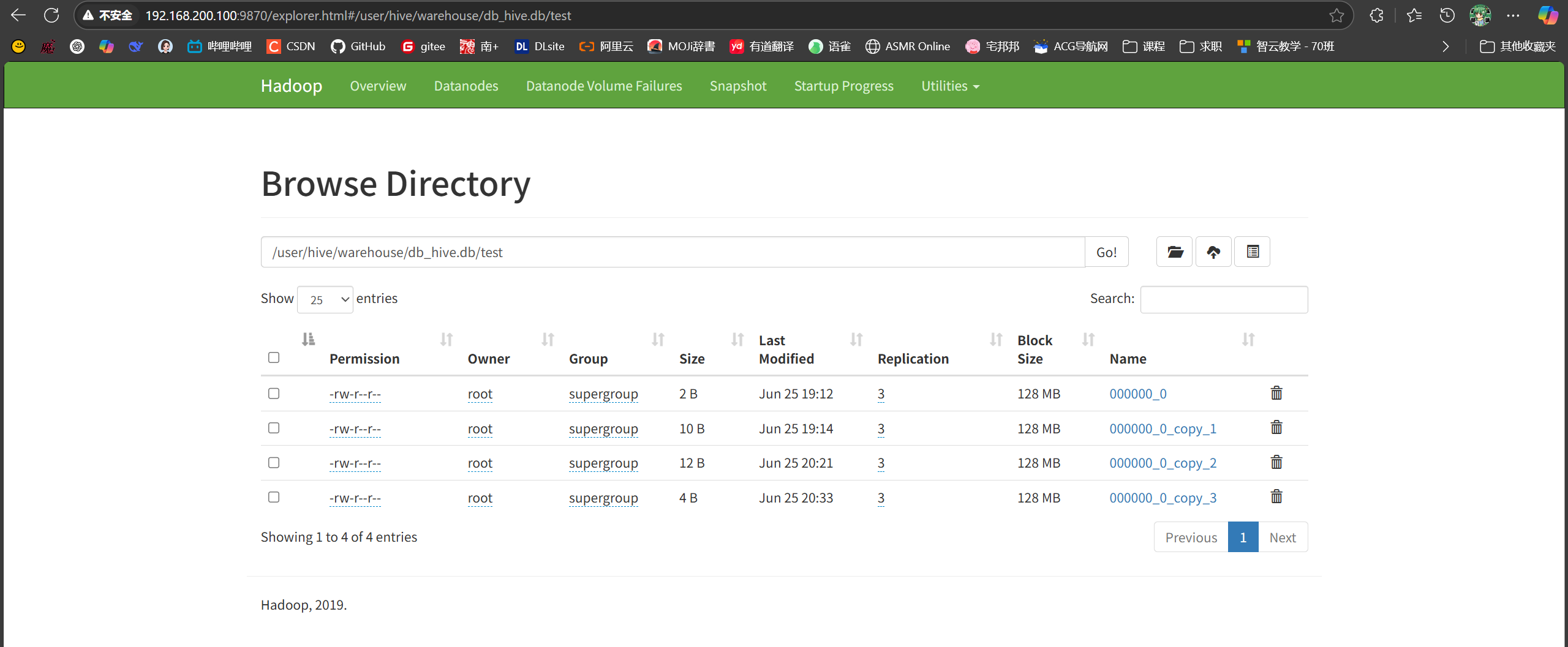

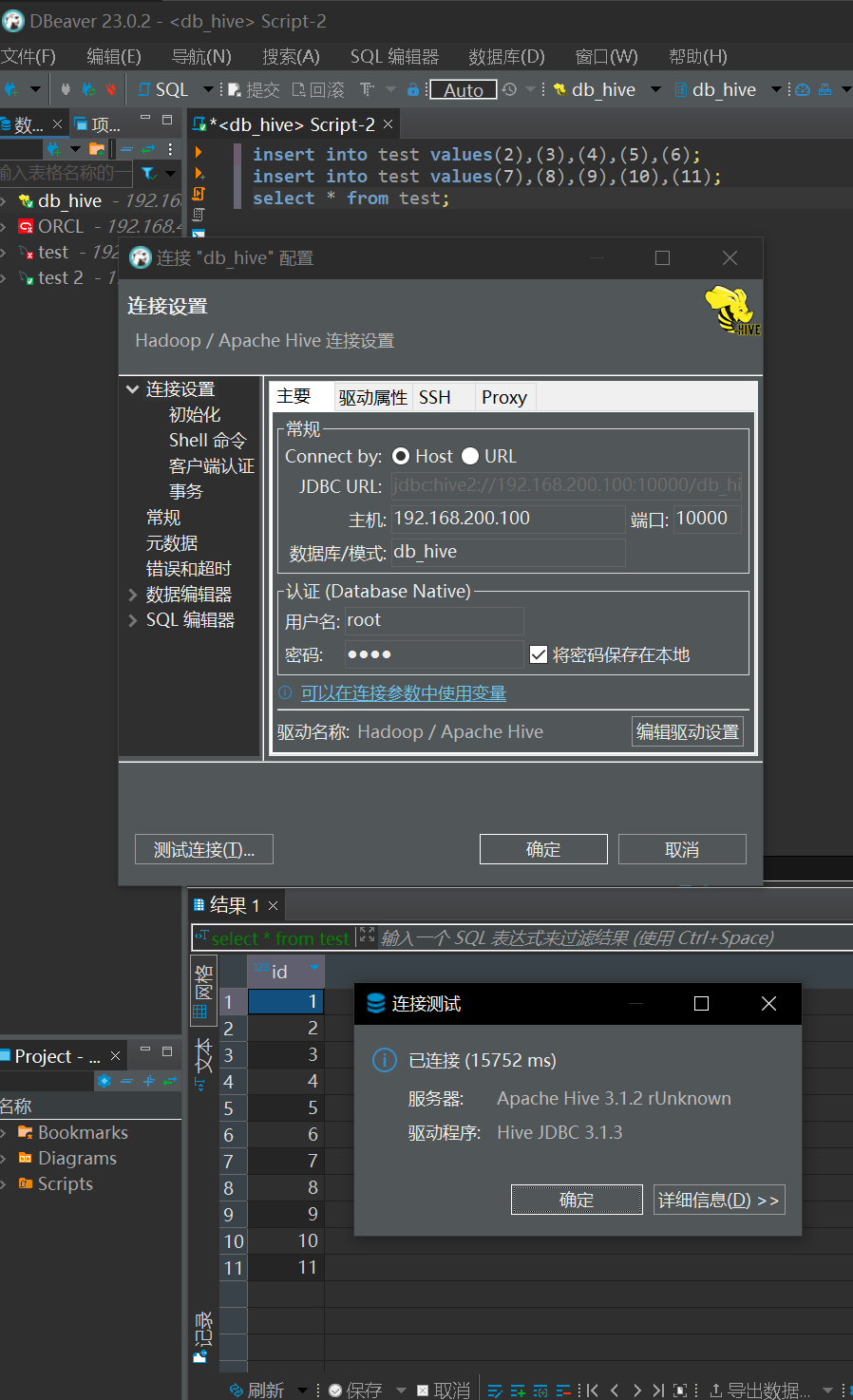

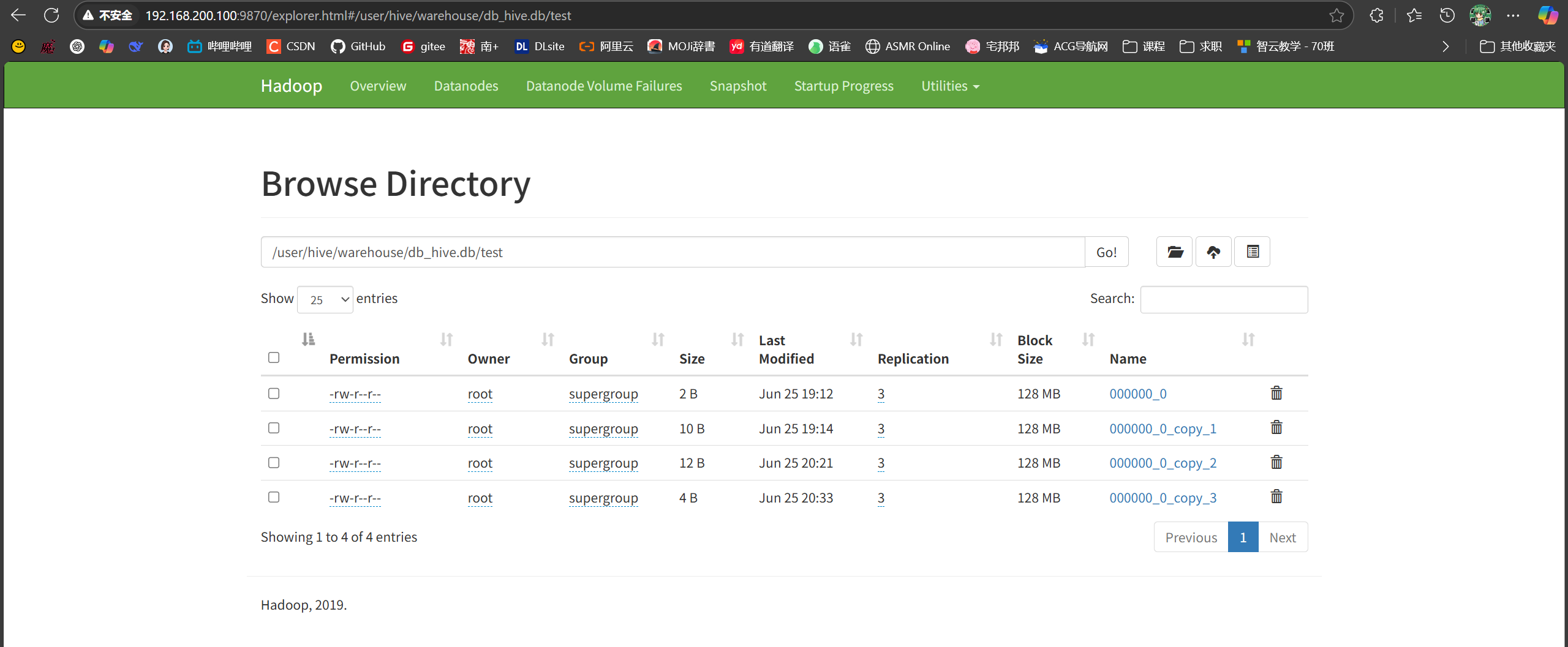

Result screenshots